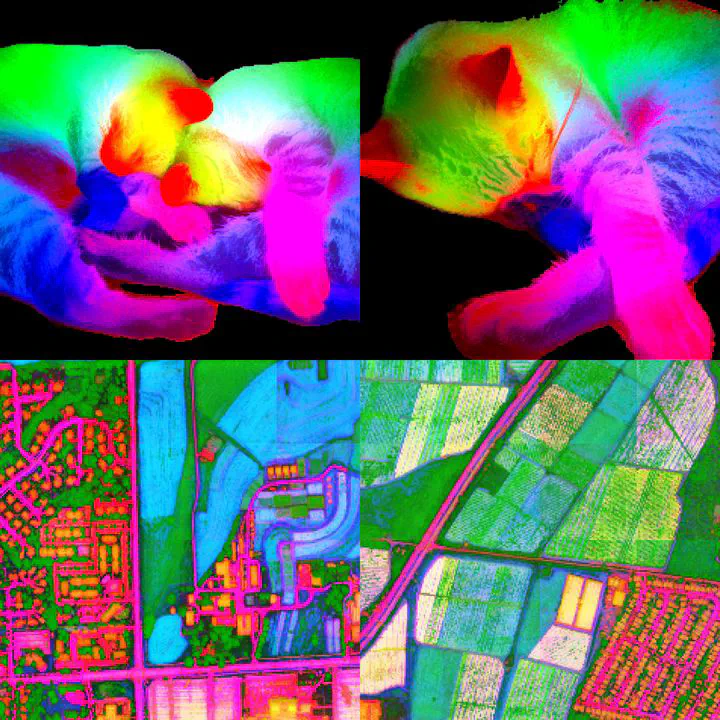

DINOv3 dense features on natural and aerial images.

DINOv3 dense features on natural and aerial images.Abstract

Self-supervised learning holds the promise of eliminating the need for manual data annotation, enabling models to scale effortlessly to massive datasets and larger architectures. By not being tailored to specific tasks or domains, this training paradigm has the potential to learn visual representations from diverse sources, ranging from natural to aerial images – using a single algorithm. This technical report introduces DINOv3, a major milestone toward realizing this vision by leveraging simple yet effective strategies. First, we leverage the benefit of scaling both dataset and model size by careful data preparation, design, and optimization. Second, we introduce a new method called Gram anchoring, which effectively addresses the known yet unsolved issue of dense feature maps degrading during long training schedules. Finally, we apply post-hoc strategies that further enhance our models’ flexibility with respect to resolution, model size, and alignment with text. As a result, we present a versatile vision foundation model that outperforms the specialized state of the art across a broad range of settings, without fine-tuning. DINOv3 produces high-quality dense features that achieve outstanding performance on various vision tasks, significantly surpassing previous self- and weakly-supervised foundation models. We also share the DINOv3 suite of vision models, designed to advance the state of the art on a wide spectrum of tasks and data by providing scalable solutions for diverse resource constraints and deployment scenarios.

DINOv3 🦖🦖🦖

DINOv3 is a significant leap forward in self-supervised vision foundation models. This major release sets a new standard, offering stunning high-resolution dense features that are set to revolutionize various vision tasks.

While we scaled both model size and training data, DINOv3’s true innovation lies in key architectural and training advancements.

What’s inside DINOv3?

DINOv3 is packed with features and techniques:

- A powerful 7B ViT foundation model, complemented by smaller distilled models.

- Trained on a massive dataset of 1.7 billion curated images, remarkably without any annotations, showcasing the power of self-supervision.

- The introduction of Gram anchoring, a novel technique that effectively addresses feature map degradation, a common challenge when training models of this scale for extended periods.

- Achieving high-resolution adaptation through the use of relative spatial coordinates and 2D RoPE, enabling exceptional performance on high-resolution inputs.

Dense Feature Degradation and Gram Anchoring

As we scaled up model size, number of images, and training duration, we observed two phenomena:

- Downstream classification accuracy continued to improve.

- Performance on dense tasks, which rely on rich, consistent feature maps, would rapidly drop.

For example, see the comparison of ImageNet-1K classification accuracy vs. Pascal VOC segmentation mIoU:

From qualitative observations, we notice that early during training, dense features are nicely localized and consistent within object boundaris. However, as training progresses, they become progressively more noisy.

To overcome this roadblock, we introduce Gram anchoring. Our goal is to achieve consistency found in early training iterations while retaining the semantic richness developed during late training. Therefore, we load an early checkpoint of the model being trained, and use is as a second teacher. Instead of forcing the features of the student to match the teacher features perfectly, which would be a too strong constraint, we distill the high-resolution pairwise patch similarity, i.e. the Gram matrix, from the teacher. Critically, this still allows the student features to evolve freely and improve, enabling unprecedented performance.

Upon introducing Gram anchoring, we qualitatively observe an improvement in the feature maps:

And quantitatively, we see a significant boost in performance on dense tasks:

High-Resolution Adaptation

Now that we can train the model for longer, we should also think about training it on high-resolution images. For the longest part, we feed the model images of size $256{\times}256$, which we increase to $512{\times}512$ and $768{\times}768$ in the final training stages.

Our constant-schedule training approach allows us to spin off a model specifically for high-resolution adaptation at any point. We seamlessly increase the input resolution while keeping Gram anchoring enabled. In our implementation, we use relative spatial coordinates in the range of $[0,1]^2$ to represent the position of each patch in the image for the 2D rotational positional encoding (RoPE). As a consequence, images of higher resolution are simply treated as an interpolation of the patch coordinates, without special handling.

How well does it work?

Qualitative Visualization

Compared to other vision foundation models, both self-supervised and not, DINOv3 features are more consistent and semantically rich. The quality of DINOv3’s feature maps is truly exceptional.

In the crowded market scene below, we visualize the cosine similarity between the patches marked with a red cross and all other patches.

Compared to other vision foundation models, both self-supervised and not, DINOv3 patch features are more consistent, artifact-free, with no unwanted spilling around the edges of objects. Object contours look so precise, it appears like object segmentation is just one clustering step away!

Below, we visualize the first three PCA components of the features as RGB:

After high-resolution adaptation, DINOv3 models generalize well to a wide range of resolutions, even higher than those seen during training. Forget Full-HD, it’s time for dense features in 4K!

Performance Evaluation

Even with a frozen backbone, DINOv3 delivers massive performance gains across a variety of benchmarks. Some examples include:

- COCO detection: achieving a state-of-the-art 66.1 mAP with a frozen backbone and detection model based on Plain-DETR. This is incredible!

- ADE20k segmentation: a linear 55.9 mIoU, a significant +6 improvement over previous self-supervised methods, and 63.0 mIoU with a decoder on top.

- 3D correspondence: impressive 64.4 recall on NAVI.

- Video tracking: a strong 83.3 J&F on DAVIS, thanks to its ability to handle high-resolution inputs.

Below, is an example of segmentation tracking on a video. Object instances in the first frame of the video are segmented manually (thanks SAM 2!). Subsequent frames are automatically segmented by propagating labels from the first frame based on patch similarity in the DINOv3 feature space.

Model Family

DINOv3 comes with a versatile model family to suit various needs:

- The powerful ViT-7B base model.

- A range of ViT variants: ViT-S/S+/B/L/H+ with parameters ranging from 21M to 840M.

- ConvNeXt variants optimized for efficient inference.

- A text-aligned ViT-L (dino.txt), opening up exciting possibilities for multi-modal applications.

Also, by popular demand, we switched to patch size 16! No more patch size 14 and weird image sizes like $224{\times}224$ and $448{\times}448$.

The DINOv3 recipe exhibits remarkable generalization beyond natural images. We train a model from scratch on aerial images, setting a state of the art on geospatial benchmarks like canopy height estimation and land cover classification. This demonstrates the pure magic of self-supervised learning: the same recipe, applied to a different domain, yields incredible results.

We are committed to open-source and we release the DINOv3 models with a free commercial license. Find everything you need to get started:

- Training and evaluation code, adapters, and notebooks in the GitHub repository.

- Pretrained backbones in this HF collection compatible with the Transformers library.

- The official blog post.

- The research paper on arXiv.

Twitter thread

Say hello to DINOv3 🦖🦖🦖

— Federico Baldassarre (@BaldassarreFe) August 14, 2025

A major release that raises the bar of self-supervised vision foundation models.

With stunning high-resolution dense features, it’s a game-changer for vision tasks!

We scaled model size and training data, but here's what makes it special 👇 pic.twitter.com/VBkRuAIOCi