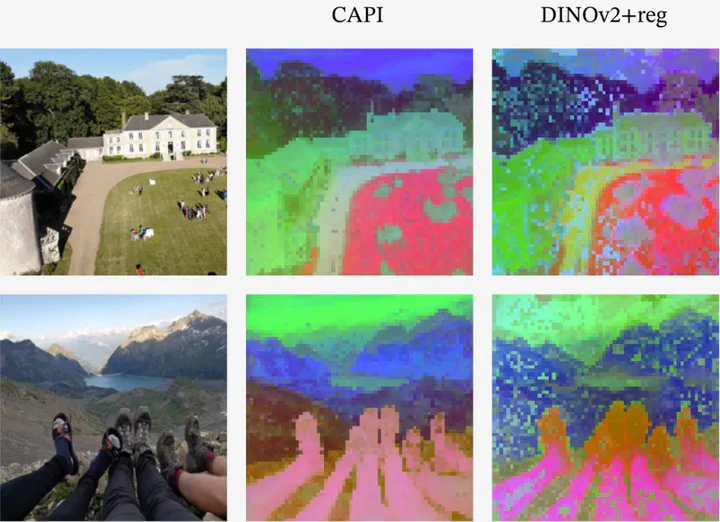

Visualization of learned patch features. Comparing CAPI vs. DINOv2 with registers.

Visualization of learned patch features. Comparing CAPI vs. DINOv2 with registers.Abstract

Masked Image Modeling (MIM) offers a promising approach to self-supervised representation learning, however existing MIM models still lag behind the state-of-the-art. In this paper, we systematically analyze target representations, loss functions, and architectures, to introduce CAPI – a novel pure-MIM framework that relies on the prediction of latent clusterings. Our approach leverages a clustering-based loss, which is stable to train, and exhibits promising scaling properties. Our ViT-L backbone, CAPI, achieves 83.8% accuracy on ImageNet and 32.1% mIoU on ADE20K with simple linear probes, substantially outperforming previous MIM methods and approaching the performance of the current state-of-the-art, DINOv2.

Here is a tweet-sized summary by the lead author:

Want strong SSL, but not the complexity of DINOv2?

— TimDarcet (@TimDarcet) February 14, 2025

CAPI: Cluster and Predict Latents Patches for Improved Masked Image Modeling. pic.twitter.com/gOB4QO7DKn